Processing larger HL7v2 data feeds efficiently

“Tina, eat. Food. Eat the FOOD!” - Napoleon Dynamite

Recently at work, I was reading an evaluation of a vendor pilot. If it helps frame your mindset around who has these types of problems, this was a relatively well-known vendor, not a brand-new startup. The vendor said they did not want to process one million HL7v2 messages per day to power their EHR software add-on, claiming it was too much data for them to handle.

Too much data! At an average of roughly 16KB per message, it's approximately 16GB of data. That's 4 HD movies, or downloading a season of a TV show before a flight. It's not that much data.

There are challenges to this type of data integration, notably that HL7v2 must be processed synchronously (one message at a time, in order) to stay in sync with the sending system. However, they are easily remediable. Being able to solve these problems easily will make you a better-integrated vendor and partner to your health systems. Here's what you can do to process data more efficiently, so that you can confidently tell a health system you can process 20 million messages a day with no problem.

Avoid using HL7v2 to build patient lists when possible

The most common use case that requires ingesting massive amounts of HL7v2 data is building patient lists. A health system sends the vendor its entire registration or scheduling feed, and the vendor then chops it down to something more manageable for consumption. While this is the most standard approach to building patient lists, it is not always the best approach in 2024. Instead:

Try pulling from existing system lists, using something like Epic's Patient List API to get the census of a given unit. It involves knowing which list to pull, but to build a patient list you need to do some semantic data mapping anyway, so you might as well use something that already works well. You can do something similar with appointments in Cerner and in Veradigm/old Allscripts ambulatory stuff.[1] Unless your application absolutely requires real-time data, I would try to avoid syncing data via HL7 at all costs now. If you already have an HL7v2 mechanism to take care of this, don't mothball it, because you may still need it, but there are better ways to handle this nowadays.

Build SMART-on-FHIR integrations for EHR-centric flows. If your application's point of use involves the EHR, use SMART-on-FHIR to establish application context instead of ingesting an entire registration feed. If your application is part of every appointment, this is the best way to build a seamless jump-off point into your app.

Instead of using big lists for limited use cases, just use single-patient search tools. If your application isn't in use for every patient, and it's hard to define which patients are going to be on a list, building a patient list isn't the most efficient use of anyone's time. We had situations like this at my last job, where ultrasound carts floated between floors for intermittent use (~1-2 exams per day). To populate data to the cart, we'd build huge patient lists with every patient in the hospital on them and have the user filter through them. It wasn't a great use of time, and it provided a bad user experience. A single-patient lookup using something like a FHIR Patient search query would have worked better. This isn't a forever decision: if volume ever increases, migrate those users to lists.
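The FHIR alternatives above each come down to a small amount of code. The Python below is a minimal sketch, not any vendor's client: it assumes the EHR exposes a patient list as a standard FHIR R4 `List` resource (Epic's patient list API may differ, so check its documentation), that a SMART launch returns the in-context patient in the token response's `patient` field, and that the server supports basic `Patient` search parameters. The base URL and all helper names (`fetch_json`, `patient_refs_from_list`, `launch_patient`, `patient_search_url`) are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def fetch_json(url: str, token: str) -> dict:
    """GET a FHIR resource or Bundle with a bearer token (not exercised below)."""
    req = urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/fhir+json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def patient_refs_from_list(list_resource: dict) -> list[str]:
    """Collect Patient references (e.g. "Patient/abc") from a FHIR List resource."""
    refs = []
    for entry in list_resource.get("entry", []):
        ref = entry.get("item", {}).get("reference", "")
        if ref.startswith("Patient/"):
            refs.append(ref)
    return refs


def launch_patient(token_response: dict) -> Optional[str]:
    """SMART App Launch: the token response may carry the in-context patient ID."""
    return token_response.get("patient")


def patient_search_url(base: str, **params: str) -> str:
    """Build a FHIR Patient search URL of the form [base]/Patient?name=value&..."""
    return f"{base.rstrip('/')}/Patient?{urllib.parse.urlencode(params)}"


# Usage with inline example data instead of a live call:
census = {"resourceType": "List", "entry": [
    {"item": {"reference": "Patient/abc"}},
    {"item": {"reference": "Patient/def"}},
]}
print(patient_refs_from_list(census))
print(patient_search_url("https://fhir.example.org/R4", family="Doe", birthdate="1980-01-01"))
```

In practice you would pass the URL for the list (or the search) to `fetch_json` with your access token; which search parameters are supported varies by server.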

But what if HL7v2 is your only option? How can you make the data flow seem performant without angering your customer's IT counterparts with slow ADT feeds?

Good HL7v2 data flow

In terms of criticality, there are two types of data integrations powering patient lists:

1) High Priority: This is your ADT feed into the EHR (if registration and the EHR are separate systems). Nothing can be documented on a patient, and prescriptions and orders can't be processed correctly, if the patient's information is incorrect. These transactions must be processed effectively synchronously.

2) Lower Priority: This is what most applications need in terms of data priority. The UI could be minutes behind the data feed with no ill effect. Most documentation occurs well after the fact and isn't sensitive to timing. Even if data were significantly delayed or incorrect, clinical workflows would not be impacted (basically, the receiving software is used for LMR completeness). In the past, I've described the criticality of these integrations as "Escalators, Temporarily Stairs".

The integration serves as a convenience, both contractually and functionally, and would not significantly delay workflows if it weren't 100% accurate or up to date. This isn't an excuse to build a bad product or data feed; it's just context for the setup I'm about to recommend.

To demonstrate my recommended approach, I'll be using Mirth Connect on an ordinary Linux VM, backed by Postgres. I've written in the past about why an interface engine is a good idea, and I still believe it. Unless your app runs entirely on API/FHIR integrations, you want something that lets you tune interfaces without involving R&D. Whether you handle that yourself or a managed vendor handles it for you is up to you.

Separating ingestion from processing

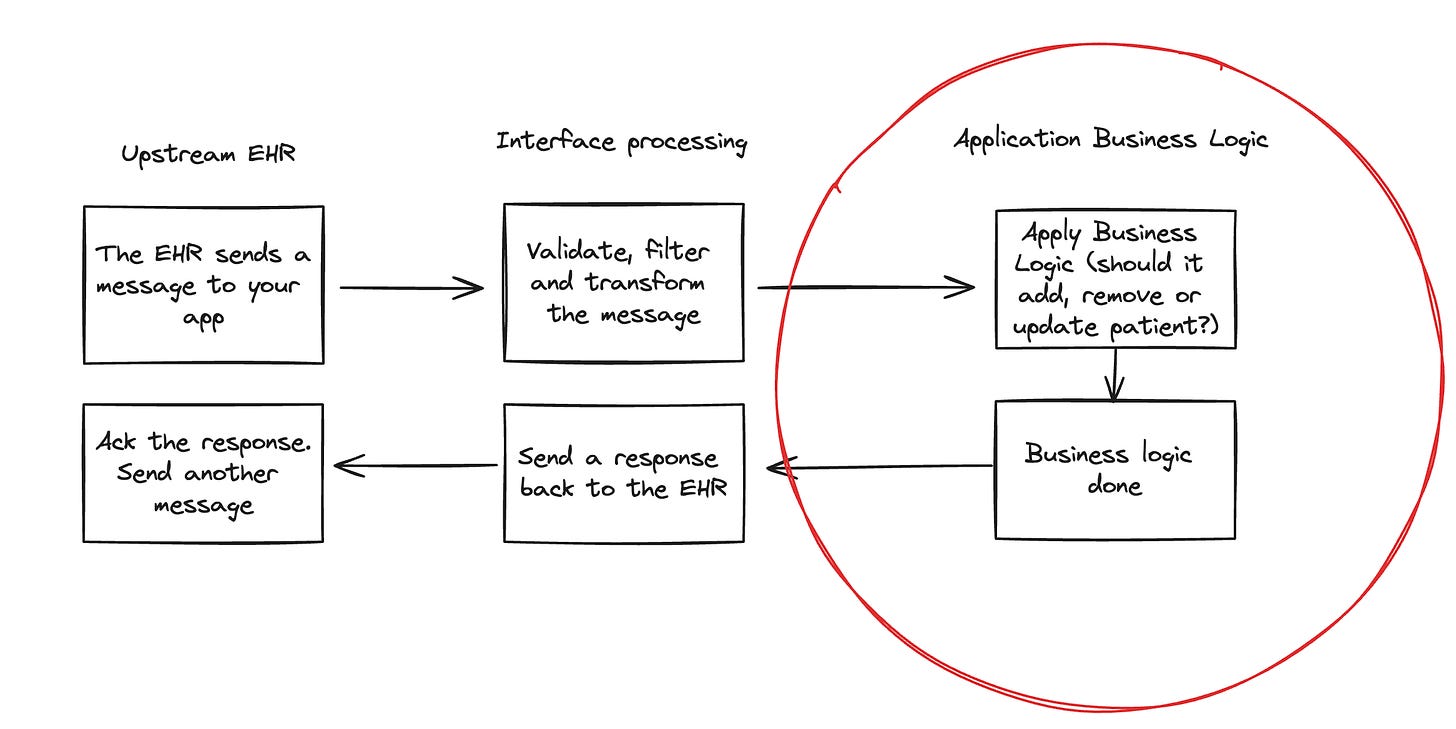

Let’s quickly go over the lifecycle of an HL7v2 message:

Pretty straightforward. Usually the culprit in slowness is here:

Some causes:

Overhead in service-to-service transactions between the HL7 ingestion service and the rest of the backend. This is especially true if the handoff mechanism between these two services is unoptimized HTTPS POSTs.[2]

The business logic takes a while to evaluate, either due to complexity or inefficiency. This makes some sense, since it is important to validate messages to ensure that old or bad messages aren't being processed (trying to admit a patient who's already admitted, discharging an already discharged patient, etc.).
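For the HTTPS-POST case in particular, a common mitigation is to keep one persistent (keepalive) connection open and send every message over it, so the TCP and TLS handshakes happen once instead of once per message. The sketch below uses only Python's standard `http.client`; the `/ingest` path and the function name are hypothetical. It takes a plain `HTTPConnection` so it can run against a local test server; in production you would pass an `HTTPSConnection`, which supports the same calls.

```python
import http.client


def post_messages(conn: http.client.HTTPConnection, messages: list[str],
                  path: str = "/ingest") -> list[int]:
    """POST each message over the same open connection; return the HTTP status codes.

    `path` is a hypothetical backend endpoint. Because `conn` is created once by the
    caller and reused, there is no new handshake per message.
    """
    statuses = []
    for msg in messages:
        conn.request("POST", path, body=msg.encode("utf-8"),
                     headers={"Content-Type": "text/plain; charset=utf-8"})
        resp = conn.getresponse()
        resp.read()  # drain the body so the connection can be reused for the next request
        statuses.append(resp.status)
    return statuses
```

The server side has to speak HTTP/1.1 keepalive for this to help; if it closes the connection after every response, `http.client` will transparently reconnect and you are back to per-message handshakes.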

The flip side is that the ingestion component usually just works better, either because most interface engines ship with code optimized for queueing out of the box, or because some software engineering teams focus their attention there precisely because it's unfamiliar to them. The engineers building the feature may not have understood the sheer volume of HL7v2 messages, or their lack of parallelization, and got the first attempt wrong.

Of course, you can always optimize your code that processes the business logic of a patient list. But there is a faster solution to optimize interfaces immediately.

Aggressively filter huge feeds

On my 2GB/1CPU VM, using a sender channel to send an HL7v2 message to an ADT channel with no transformer logic takes ~20ms. That means that as long as data is sent purely serially, we could process ~4,320,000 messages per day on one channel. Nice! That is probably enough for most smaller and probably even mid-size organizations. It's worth noting that if you put more power into your Mirth and your Postgres, you'll probably see even better performance than 20ms per message.

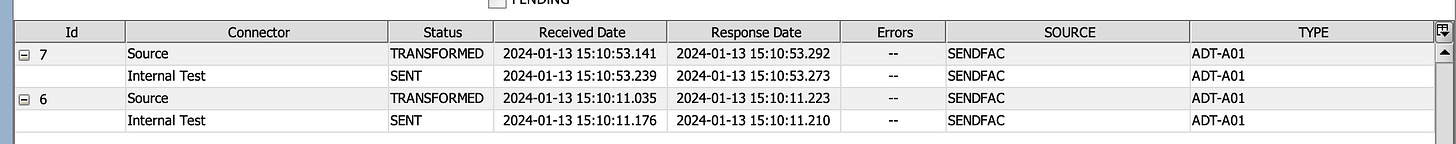

What happens if we add some transformer logic? Even some chunky transformer logic: I dumped in a whole multi-KLOC piece of code that JSON-ifies an HL7 message. Unsurprisingly, our performance drops drastically:

We are now at ~130ms of processing time for the same ADT message, which means we can only process ~665,000 messages per day. Less good, and honestly probably not good enough for most organizations sending a full ADT feed. Let's treat this as the unoptimized channel we want to improve.
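The per-day numbers in this section are simple division: a strictly serial channel gets 86,400,000 milliseconds per day, so its capacity is that figure divided by the per-message latency. The sketch below makes that explicit, along with the daily-volume estimate from the introduction. It assumes fully serial processing, constant latency, and no idle gaps, which real feeds won't match exactly; the function names are hypothetical.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000  # 86,400,000 ms in a day


def messages_per_day(ms_per_message: float) -> int:
    """Messages a single, strictly serial channel can process per day at a given latency."""
    return int(MS_PER_DAY // ms_per_message)


def daily_volume_gb(messages: int, avg_kb_per_message: float) -> float:
    """Approximate daily feed size in GB, using 1 GB = 1,000,000 KB."""
    return messages * avg_kb_per_message / 1_000_000


for label, ms in [("no transformer", 20), ("heavy transformer", 130)]:
    print(f"{label}: {messages_per_day(ms):,} msgs/day at {ms} ms/msg")
print(f"1M messages at 16 KB each: {daily_volume_gb(1_000_000, 16):.0f} GB/day")
```

At 20ms this gives 4,320,000 messages per day and at 130ms about 664,615. Parallel channels or lower latency are the only two levers in this formula.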

The first thing we should do is ignore messages our application will never process. Is your application only live in certain departments? Filter them! Do you not need to process transfers, and only need currently admitted patients? Filter them! Do you have no idea what an ADT^A21 message does even though you've been a data integration professional for years? That's fine. Do you not want to process leaves of absence because you have no idea how they work in practice? Don't![3] As a vendor, you need to support as much of the data semantics as your users need to understand. If your user journeys deal with pre-admission tasks, you may care about pre-admissions. If your user journeys only deal with in-person patients, you may not.

In our case, let's build a basic filter that only accepts messages in "Med Surg", which is the only department our fictional software is live in. This is easy to build in Mirth Connect.
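Here is the Med Surg filter logic written as a small Python function, purely to show what the filter checks; in Mirth itself this would be a filter rule on the channel. It is a sketch under stated assumptions: the department code is assumed to be in PV1-3.1 (the point-of-care component of the assigned patient location), the component separator is assumed to be `^`, and the accepted event list is only an example. Where the department actually lives varies by site, so confirm field locations with the customer.

```python
# Example sets only; the real lists come from the customer.
ALLOWED_EVENTS = {"ADT^A01", "ADT^A02", "ADT^A03", "ADT^A08"}
ALLOWED_DEPARTMENTS = {"MED SURG"}


def parse_segments(message: str) -> dict[str, list[str]]:
    """Map segment ID -> fields (split on "|") for the first occurrence of each segment."""
    segments: dict[str, list[str]] = {}
    for line in message.replace("\n", "\r").split("\r"):
        if line:
            fields = line.split("|")
            segments.setdefault(fields[0], fields)
    return segments


def accept(message: str) -> bool:
    """Return True only for allowed ADT events in allowed departments."""
    seg = parse_segments(message)
    msh, pv1 = seg.get("MSH"), seg.get("PV1")
    if msh is None or pv1 is None or len(msh) < 9 or len(pv1) < 4:
        return False
    # MSH-1 is the field separator itself, so MSH-9 lands at index 8 after splitting.
    event = "^".join(msh[8].split("^")[:2])  # "ADT^A01^ADT_A01" -> "ADT^A01"
    department = pv1[3].split("^")[0]        # PV1-3.1 (assumed department location)
    return event in ALLOWED_EVENTS and department.upper() in ALLOWED_DEPARTMENTS
```

The important design point is that the check runs before any expensive transformer work, so rejected messages cost only a split and two set lookups.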

Filtering a message in a Mirth channel that does a lot of work is not as efficient as never receiving the message at all. But we do get some efficiency here: filtered messages now process in ~40ms on our tiny Mirth box (roughly 2,160,000 messages per day at that rate). Assuming we don't need to eat the majority of the HL7 feed we're receiving, we can probably safely say we can process millions of messages again.

"But Mark," you may ask, "I don't want my team to be responsible for managing filters. It's nitpicky, and I don't want to be responsible for it." My general feedback:

For most use cases, it's not that bad, and you should be able to build filters quickly. The key is making clear to the customer that they are responsible for telling you what the filters should be. Defining them is usually the time-consuming part, so make sure the customer knows it's their responsibility. If you do that, you can probably manage hundreds of thousands of filters with a skeleton crew.

If you think the filters are going to change dynamically, or for whatever reason you think managing the changes yourself is untenable in a SaaS solution, nothing stops you from putting this in "customer space". Build the filter list into your application's organization-level config and then load it into Mirth, either from an accessible database or into a global variable for reference.
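A minimal sketch of the "customer space" approach: filter lists live in per-customer application config, are parsed once, and are cached in memory for the filter to consult. The JSON shape, the customer IDs, and the function names are hypothetical, and `functools.lru_cache` stands in for whatever caching you would really use (in Mirth, that might be a database lookup or a value stored in a global variable at deploy time).

```python
import json
from functools import lru_cache

# Hypothetical organization-level config, as your application might store it.
CONFIG_JSON = """
{
  "customer-a": {"departments": ["MED SURG"], "events": ["ADT^A01", "ADT^A03"]},
  "customer-b": {"departments": ["ICU", "MED SURG"], "events": ["ADT^A01"]}
}
"""


@lru_cache(maxsize=None)
def load_filters(customer_id: str) -> tuple[frozenset[str], frozenset[str]]:
    """Parse one customer's filter config once and cache it for later messages."""
    cfg = json.loads(CONFIG_JSON)[customer_id]
    return frozenset(cfg["departments"]), frozenset(cfg["events"])


def is_wanted(customer_id: str, event: str, department: str) -> bool:
    """Check an already-extracted event type and department against that customer's lists."""
    departments, events = load_filters(customer_id)
    return event in events and department.upper() in departments
```

When a customer edits their config, calling `load_filters.cache_clear()` forces the next message to pick up the new lists, which keeps the change in the customer's hands rather than in a channel redeploy.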

What if filters aren’t enough? Let’s try something else.

Changing ACK order

Going back to our earlier discussion of message priority and criticality, it's a good time to reflect on whether your application needs to be fast or just feel fast. If it needs to be fast, you may want to skip this section. If it just needs to feel fast (that is, it must not cause the sending system to back up while waiting on you), there are tricks to manage this quickly.

I think the thing about ACKs that many newcomers to HL7 don't quite understand is that your HL7 ACK/NACK structure isn't binary. Realistically, you should ACK every message that meets your required data criteria. If your app consumes 8 pieces of the ADT message (patient demographics and location), make sure the message has those 8 pieces, then ACK the message or throw an error immediately. If you have data transformation or other work to do, ACK before those steps run. Do not wait until the downstream system is done processing. The interface engineer at a health system probably doesn't care, and neither should you. If you aren't ACK-ing and queueing messages as soon as they arrive, you should be. It won't make your data flow any faster, but you'll get fewer complaints from interface admins. In most apps, it's better to manually reprocess the occasional message in hard edge cases (where a message passed validation in the engine but failed in the application layer) than to run a universally slower interface processing flow.
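The ACK-first pattern can be sketched like this: check only the fields the app actually consumes, return an `AA` (accept) ACK or an `AE` (error) ACK with a reason right away, and push accepted messages onto a queue for the transformation and business-logic steps. This is a minimal Python illustration, not Mirth's built-in ACK generation; `REQUIRED_FIELDS`, `work_queue`, and the helper names are hypothetical, and a production ACK should follow whatever MSH conventions the sending system expects.

```python
import queue
import uuid
from datetime import datetime

# Hypothetical fields this app needs: (segment, field index after splitting on "|").
REQUIRED_FIELDS = [("PID", 3), ("PID", 5), ("PV1", 3)]  # MRN, name, location
work_queue: "queue.Queue[str]" = queue.Queue()  # slow work is consumed from here later


def split_segments(message: str) -> dict[str, list[str]]:
    segs: dict[str, list[str]] = {}
    for line in message.replace("\n", "\r").split("\r"):
        if line:
            fields = line.split("|")
            segs.setdefault(fields[0], fields)
    return segs


def build_ack(msh: list[str], code: str, text: str = "") -> str:
    """Minimal ACK: MSA-1 is the ack code, MSA-2 echoes the original MSH-10."""
    msh = msh + [""] * (12 - len(msh))           # pad missing trailing MSH fields
    header = [
        "MSH", msh[1],
        msh[4], msh[5],                           # MSH-3/4: we become the sender
        msh[2], msh[3],                           # MSH-5/6: original sender receives the ACK
        datetime.now().strftime("%Y%m%d%H%M%S"),  # MSH-7
        "",                                       # MSH-8
        "ACK",                                    # MSH-9
        uuid.uuid4().hex[:20],                    # MSH-10: new control ID for the ACK itself
        msh[10], msh[11],                         # MSH-11/12: copy processing ID and version
    ]
    msa = ["MSA", code, msh[9]] + ([text] if text else [])
    return "\r".join(["|".join(header), "|".join(msa)]) + "\r"


def receive(message: str) -> str:
    """Validate the required fields, ACK immediately, and queue the rest of the work."""
    segs = split_segments(message)
    msh = segs.get("MSH", ["MSH", "^~\\&"])
    missing = [f"{s}-{i}" for s, i in REQUIRED_FIELDS
               if len(segs.get(s, [])) <= i or not segs[s][i]]
    if missing:
        return build_ack(msh, "AE", "Missing " + ",".join(missing))
    work_queue.put(message)  # transformation and business logic happen off this path
    return build_ack(msh, "AA")
```

The sending system only ever waits on `receive`, whose cost is a split and a few lookups; whatever consumes `work_queue` can be as slow as it needs to be.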

Multi-threading an HL7 feed and other interface advice

If you've expanded your hardware, tried these suggestions, and your data processing is still running behind, you could also try more experimental things to drive throughput. Some ideas:

1) Split the HL7 feed in two and process both halves.

Sometimes the health system has multiple feeds for its hospitals, and having them split the feed once might do the trick. It's not uncommon for a health system that went through a merger to have an "old Epic" and a "new Epic" pipe. If the health system can't split it for you, you can try splitting it yourself. This may allow you to parallelize work, which can lead to more efficiency.

2) Use tooling to allow multi-threading safely.

Technically speaking, you don't have to process all data synchronously. The sole requirement is really that you can't process a patient's data out of order. As such, you could hypothetically thread messages by some unique ID (patient or encounter) and increase throughput significantly. Mirth has a feature that does this called Thread Variable Assignment. However, this isn't without risk. It's probably a blog topic on its own, but the five-cent summary is that neither the patient ID nor the encounter ID is guaranteed to keep messages from affecting each other. Patient IDs can be changed by merges (while the encounter ID stays the same), and information within one encounter can be affected by other encounters. Again, it comes down to your use case; if your app doesn't need to be concerned with merges or in-flight registration changes, the risk drops significantly. Proceed with caution.
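Both ideas above reduce to key-based partitioning: every message with the same key goes to the same single-threaded lane, so per-key order is preserved while different keys run in parallel. This is a generic Python sketch of that idea, not how Mirth's Thread Variable Assignment is implemented; `NUM_WORKERS`, `patient_key` (which reads PID-3.1), and `run_partitioned` are hypothetical names, and the merge and encounter caveats above apply to whatever key you pick. Splitting a feed in two is the same call with `workers=2` and a key such as the sending facility in MSH-4.

```python
import queue
import threading
import zlib
from typing import Callable, Iterable

NUM_WORKERS = 4  # hypothetical lane count


def patient_key(message: str) -> str:
    """Return PID-3.1 (first component of the patient identifier list), or "" if absent."""
    for line in message.replace("\n", "\r").split("\r"):
        if line.startswith("PID|"):
            fields = line.split("|")
            return fields[3].split("^")[0] if len(fields) > 3 else ""
    return ""


def run_partitioned(messages: Iterable[str], handle: Callable[[str], None],
                    key: Callable[[str], str] = patient_key,
                    workers: int = NUM_WORKERS) -> None:
    """Process messages in parallel lanes; messages sharing a key stay in arrival order."""
    lanes = [queue.Queue() for _ in range(workers)]

    def worker(lane: queue.Queue) -> None:
        while True:
            msg = lane.get()
            if msg is None:  # sentinel: this lane has no more work
                return
            handle(msg)

    threads = [threading.Thread(target=worker, args=(lane,)) for lane in lanes]
    for t in threads:
        t.start()
    for msg in messages:
        # zlib.crc32 is a stable hash, so a key always maps to the same lane;
        # Python's built-in hash() of a str is randomized per process.
        lanes[zlib.crc32(key(msg).encode("utf-8")) % workers].put(msg)
    for lane in lanes:
        lane.put(None)
    for t in threads:
        t.join()
```

Because each lane is drained by exactly one thread in FIFO order, ordering is only guaranteed per key, which is exactly why a merge that changes a patient's key mid-stream can break it.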

Closing

If you're a vendor, you don't want to rely on a health system to chop up data for you. Either avoid the situation, do the work yourself, or make it easy for an analyst (not an interface engineer) to make things more efficient.

[1] Please check the respective vendor sites for current APIs and documentation.

[2] HTTPS is obviously good in regulated environments, and there are ways to keep HTTPS performant, such as using keepalive (persistent) connections or a protocol that runs over TLS, like WebSockets. Remember that 10ms adds up fast when you're doing a million of something: 10ms × 1,000,000 messages is 10,000 seconds, or nearly three hours.

[3] The semantics of patient movement go beyond the three pillars of Admissions, Discharges, and Transfers. The depth of your workflow may indicate how much of the ADT feed you need to eat. But many HL7 message types pertain only to very rare cases that probably don't concern you, or that could be coerced into your supported data types using your engine.